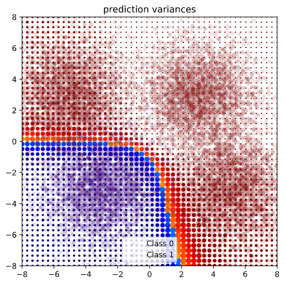

This is my summer project at PML Group, where I worked on Bayesian deep learning. Bayesian deep learning generally distinguishes two kinds of uncertainty: epistemic uncertainty (uncertainty about the network weights) and aleatoric uncertainty (uncertainty about the input data). In my project, I extended the Linear and Conv layers in PyTorch with a flow-based stochastic part, so that the extended modules can learn the distribution of the input uncertainty through variational inference. My project report is here.
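
To give a rough idea of what such an extended layer could look like, here is a minimal sketch, not the code from the project. It assumes a planar flow as the flow family, a diagonal Gaussian as the base distribution, additive noise on the layer input, and 2-D inputs of shape `(batch, in_features)`. The names `PlanarFlow`, `FlowNoiseLinear`, `n_flows` and `log_sigma` are hypothetical and chosen only for illustration.

```python
import math

import torch
import torch.nn as nn


class PlanarFlow(nn.Module):
    """One planar flow step: f(z) = z + u * tanh(w^T z + b)."""

    def __init__(self, dim):
        super().__init__()
        # Small initial values keep the step close to the identity map.
        # Note: the usual invertibility constraint (u^T w >= -1) is NOT
        # enforced in this sketch.
        self.u = nn.Parameter(torch.randn(dim) * 0.01)
        self.w = nn.Parameter(torch.randn(dim) * 0.01)
        self.b = nn.Parameter(torch.zeros(1))

    def forward(self, z):
        a = torch.tanh(z @ self.w + self.b)            # (batch,)
        f = z + a.unsqueeze(-1) * self.u               # (batch, dim)
        psi = (1 - a ** 2).unsqueeze(-1) * self.w      # (batch, dim)
        # log |det df/dz| = log |1 + u^T psi(z)|
        log_det = torch.log(torch.abs(1 + psi @ self.u) + 1e-8)
        return f, log_det


class FlowNoiseLinear(nn.Module):
    """Hypothetical Linear layer with flow-distributed input noise."""

    def __init__(self, in_features, out_features, n_flows=2):
        super().__init__()
        self.linear = nn.Linear(in_features, out_features)
        # Log standard deviation of the Gaussian base distribution.
        self.log_sigma = nn.Parameter(torch.full((in_features,), -3.0))
        self.flows = nn.ModuleList(
            [PlanarFlow(in_features) for _ in range(n_flows)]
        )

    def forward(self, x):
        # Reparameterised sample from the base distribution N(0, sigma^2).
        eps = torch.randn_like(x)
        z = eps * self.log_sigma.exp()
        log_q = (
            -0.5 * eps ** 2 - self.log_sigma - 0.5 * math.log(2 * math.pi)
        ).sum(-1)
        # Push the sample through the flow and track its log-density.
        for flow in self.flows:
            z, log_det = flow(z)
            log_q = log_q - log_det
        # Perturb the input with the learned noise, then apply the layer.
        return self.linear(x + z), log_q
```

Calling `y, log_q = FlowNoiseLinear(4, 3)(x)` returns the layer output together with the log-density of the sampled noise. In a variational objective, `-log_q` would serve as a single-sample estimate of the entropy term of the ELBO, under the assumptions stated above.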